A new study from Anthropic examines how university students are using its language model Claude in daily academic work. The analysis reveals discipline-specific usage patterns and raises concerns about the impact of AI on student learning and academic integrity.

The research team started by sifting through a staggering one million conversations from users with university email addresses. After filtering for relevance, they landed on 574,740 academic chats over an 18-day window.

Usage skewed heavily toward students in STEM fields. According to the data, computer science students accounted for 38.6 percent of all users despite making up only 5.4 percent of the U.S. student population, which means they were overrepresented by a factor of roughly seven.

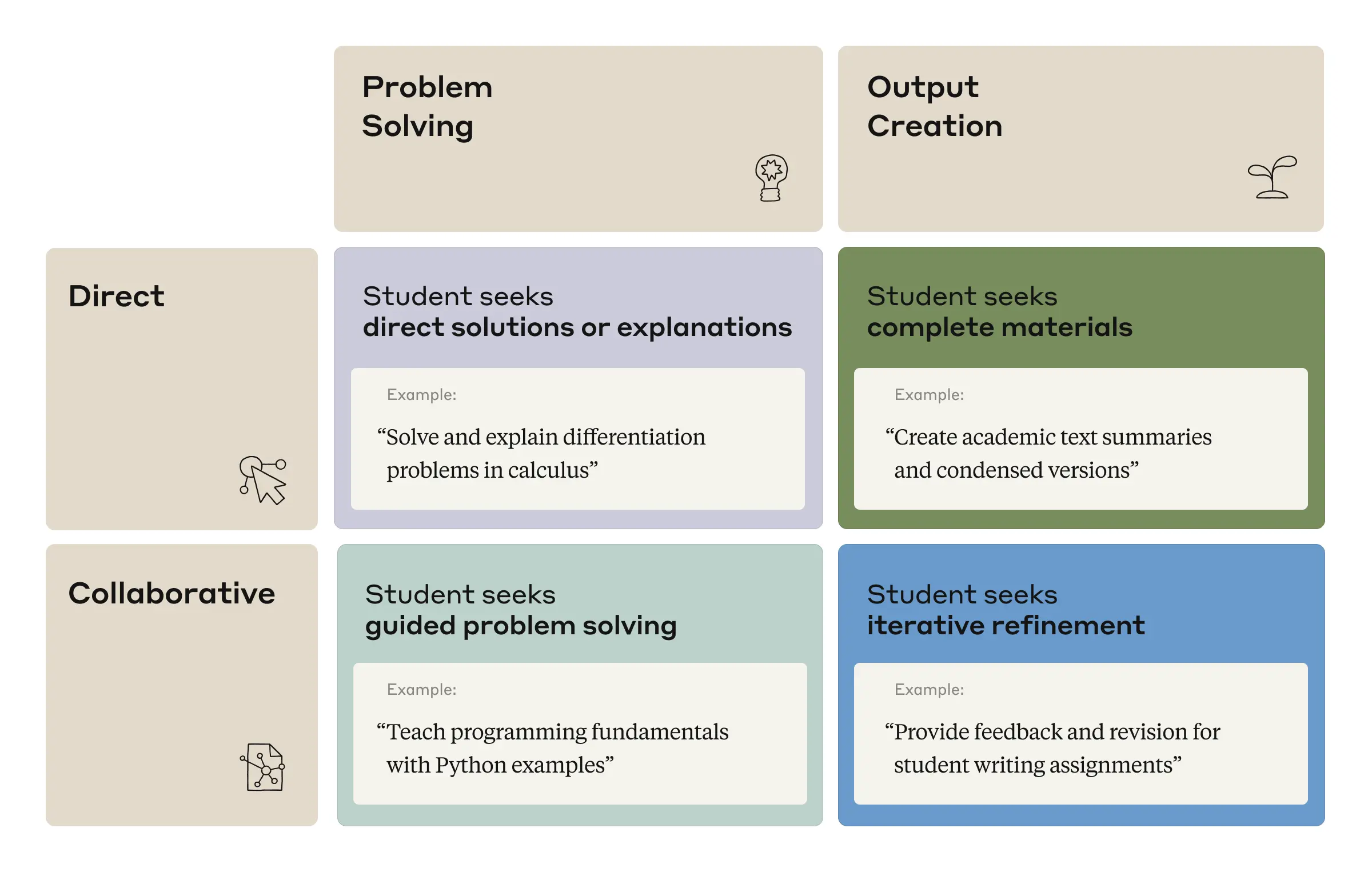

Anthropic’s researchers sorted student interactions with Claude into four patterns: direct or collaborative conversations, each focused either on solving problems or on creating content. Each of the four modes accounted for between 23% and 29% of conversations, and the two direct modes together made up nearly half (47%). In these direct, minimal-effort exchanges, students seemed to hand off the heavy lifting to Claude with little back-and-forth.


When AI does the thinking

Some usage patterns flagged by Anthropic are, frankly, questionable. Students are letting Claude handle multiple-choice questions on machine learning, generate direct answers for English tests, or rephrase marketing and business assignments to dodge plagiarism detectors. Even in more collaborative sessions—like step-by-step explanations of probability problems—the AI is still absorbing much of the cognitive load.

Anthropic categorizes interactions into direct solutions, collaborative problem-solving, explanation-seeking, and iterative content development. | Image: Anthropic

The challenge, as the researchers note, is that without knowing the context of each interaction, it’s difficult to draw a firm line between genuine learning and outright cheating. Using AI to check your work on practice exercises could be smart self-study; using it to complete graded homework is a different story.

What students mostly delegate to AI are higher-order thinking tasks. Mapped onto Bloom’s Taxonomy, 39.8% of prompts fell into the "Create" category and 30.2% into "Analyze," while lower-order actions like "Apply" (10.9%) and "Understand" (10%) were much less common.

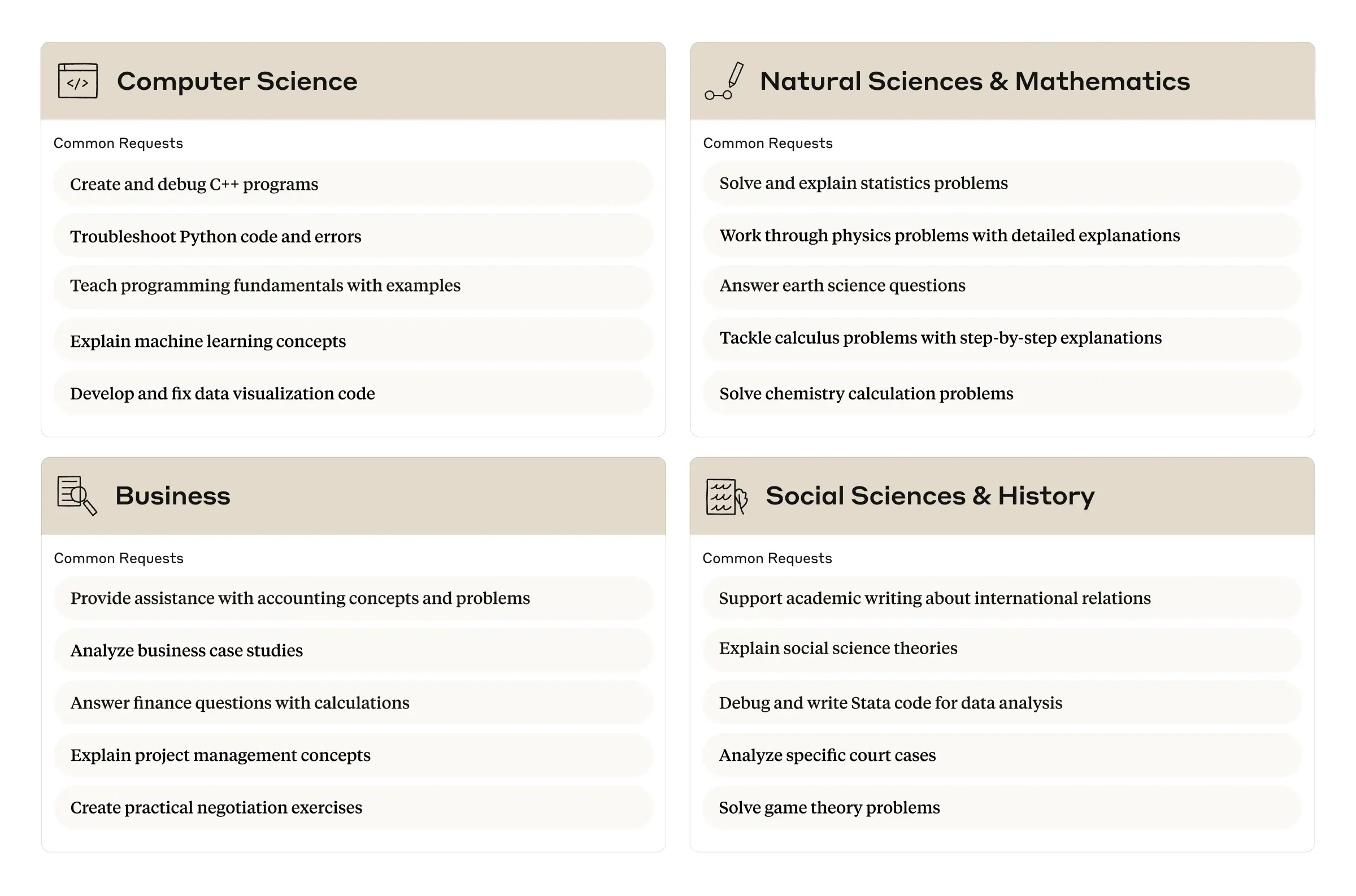

Common computer science queries included debugging, concept explanations, and data visualization. | Image: Anthropic

Patterns also diverge by discipline. Science and math students mostly use AI for problem-solving, such as guided explanations. In the education field, Claude is used primarily to create teaching materials and lesson plans (a whopping 74.4% of use cases there).

The study comes with caveats. The analysis covered a short time frame and skewed toward early adopters, which might not reflect broader student behavior. The conversation-classification method could also mislabel interactions; for example, some chats from university staff might have been lumped in with student data. Anthropic’s team calls for more research into how AI is really influencing learning—and how schools should respond.


Big AI's big campus push

Meanwhile, AI companies are racing to plant their flags in higher education. Anthropic just launched Claude for Education, a campus-focused offering with special learning modes. Schools like Northeastern University, the London School of Economics, and Champlain College are already rolling it out, and Anthropic has plans to tie it into existing platforms like Canvas LMS.

OpenAI isn’t sitting still either. Its ChatGPT Edu launched in May 2024, giving universities discounted access to the latest models along with features like data analysis and document summarization. Oxford, Wharton, and Columbia are already using it for tutoring, assessments, and administrative work.

The endgame here is pretty transparent: get students using AI—specifically, their AI—early and often. Once these students graduate and enter the workforce, the hope is they’ll keep these tools in their daily workflow, spreading both AI adoption and brand loyalty in the process.

As language models become more deeply embedded in academic workflows, the line between legitimate educational support and inappropriate shortcutting continues to blur. The next big question is how institutions will draw—and enforce—that line.
