A new method called "Chain of Draft" (CoD) helps AI models complete complex tasks using significantly fewer words and greater speed, while maintaining accuracy levels comparable to existing approaches.

CoD generates concise yet informative intermediate results, solving tasks with up to 92.4% fewer words than the established Chain of Thought (CoT) method while keeping accuracy comparable. The inspiration for CoD comes from human behavior: rather than detailing every thought, people often jot down only the essential points in brief notes. CoD mimics this strategy.

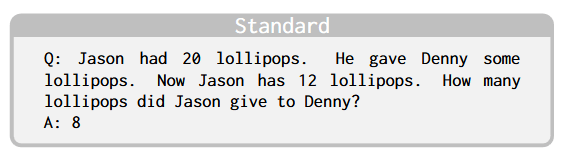

Detailed reasoning steps using the Chain of Thought method. | Image: Xu et al.

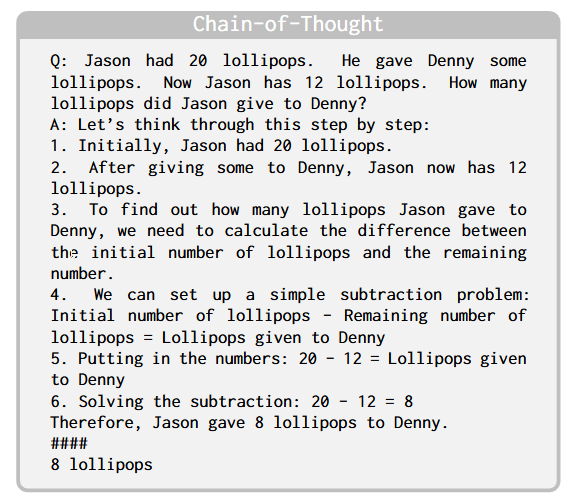

Significantly shorter reasoning steps using Chain of Draft. | Image: Xu et al.

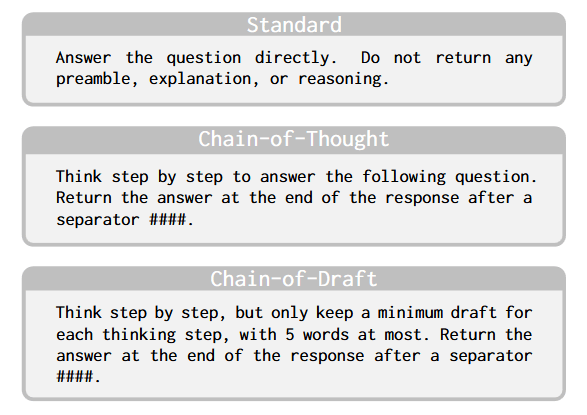

While the test prompts remain identical across all three examples, the difference lies in the system prompt. For CoD, researchers modified a chain-of-thought (CoT) prompt to limit each step to a maximum of five words.

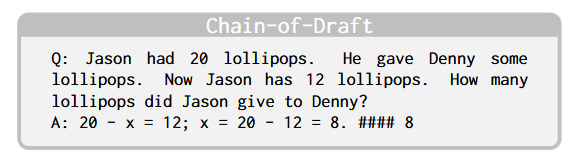

Instructions given to the AI model for Chain of Draft prompting. | Image: Xu et al.
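To make the five-word limit concrete, here is a minimal Python sketch of how such a system prompt could be paired with an unchanged user question. The prompt wording, the `####` answer separator, and the helper names `build_messages` and `overlong_steps` are illustrative assumptions, not the exact instructions or code used by Xu et al.

```python
MAX_WORDS_PER_STEP = 5  # the per-step limit described in the article

# Illustrative wording only; not the exact prompt used by Xu et al.
COD_SYSTEM_PROMPT = (
    "Think step by step, but keep only a minimal draft for each step, "
    f"with at most {MAX_WORDS_PER_STEP} words per step. "
    "Give the final answer on the last line after '####'."
)


def build_messages(question: str) -> list[dict[str, str]]:
    """Pair the CoD-style system prompt with an unchanged user question."""
    return [
        {"role": "system", "content": COD_SYSTEM_PROMPT},
        {"role": "user", "content": question},
    ]


def overlong_steps(response: str, limit: int = MAX_WORDS_PER_STEP) -> list[str]:
    """Return draft steps that exceed the word limit (answer line excluded)."""
    steps = [line.strip() for line in response.splitlines() if line.strip()]
    drafts = [s for s in steps if not s.startswith("####")]
    return [s for s in drafts if len(s.split()) > limit]


# A hypothetical model response in draft style, one step per line.
draft = "20 - x = 12\nx = 20 - 12\nx = 8\n#### 8"
print(overlong_steps(draft))  # []
```

Only the system message changes between prompting styles; the user question stays the same. The `overlong_steps` check is an optional extra for spotting responses that ignore the limit, since a model is not guaranteed to follow it.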

Short prompts deliver similar accuracy with fewer resources

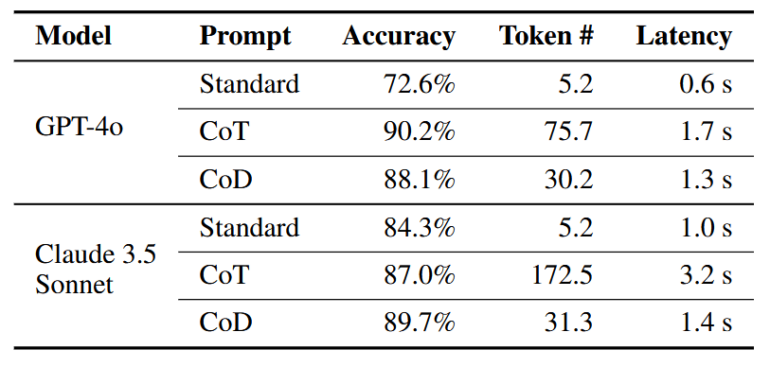

The researchers compared CoD to detailed CoT prompts and standard prompts lacking explanatory steps. In arithmetic, comprehension, and symbolic reasoning tasks, CoD achieved similar accuracy to detailed CoT, but used 68 to 86 percent fewer words.

For example, when solving comprehension tasks involving dates, CoD increased accuracy compared to standard prompts from 72.6 to 88.1 percent for GPT-4o and from 84.3 to 89.7 percent for Claude 3.5 Sonnet.

Chain of Draft achieves comparable performance while using significantly fewer tokens. | Image: Xu et al.
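The figures in this section mix two kinds of comparison: a relative reduction in words and an absolute gain in accuracy. The short Python sketch below shows how each could be computed. The accuracy values are the ones quoted above; the word counts are hypothetical and chosen only to illustrate the formula, and the function names are not from the paper.

```python
def percent_reduction(baseline: float, compact: float) -> float:
    """Percentage by which `compact` is smaller than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100 * (baseline - compact) / baseline


def point_gain(before: float, after: float) -> float:
    """Accuracy change in percentage points, rounded to one decimal."""
    return round(after - before, 1)


# Accuracy figures quoted in the article (date comprehension, standard vs. CoD).
print(point_gain(72.6, 88.1))  # GPT-4o: 15.5
print(point_gain(84.3, 89.7))  # Claude 3.5 Sonnet: 5.4

# Hypothetical word counts, chosen only to illustrate the formula.
print(percent_reduction(baseline=200, compact=40))  # 80.0
```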

CoD reduces computational costs and response times

Chain of Draft directly reduces the number of output tokens by generating shorter intermediate reasoning steps. Additionally, it indirectly lowers input token counts, especially in few-shot prompting scenarios, where multiple solved examples are included as part of the initial input prompt.

When these few-shot examples are created using the concise CoD format, each example becomes shorter, resulting in fewer tokens overall. This combined reduction in input and output tokens lowers computational costs, enables faster responses, and makes CoD particularly valuable for large-scale LLM implementations and cost-sensitive applications.
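The effect on input size can be illustrated with a rough Python sketch. It builds the same three-shot prompt twice, once with verbose worked examples and once with draft-style ones, and compares their lengths. The example texts are invented for illustration, and the whitespace word count is only a crude stand-in for a real tokenizer, so the numbers indicate direction rather than actual token counts.

```python
def rough_tokens(text: str) -> int:
    """Whitespace word count, a crude stand-in for a real tokenizer."""
    return len(text.split())


def few_shot_prompt(examples: list[tuple[str, str]], question: str) -> str:
    """Concatenate solved (question, reasoning) examples before the new question."""
    shots = [f"Q: {q}\nA: {reasoning}" for q, reasoning in examples]
    return "\n\n".join(shots + [f"Q: {question}\nA:"])


# Hypothetical solved example, written once in each style.
QUESTION = "Jason had 20 lollipops and has 12 left. How many did he give away?"
cot_shot = (
    QUESTION,
    "Jason started with 20 lollipops. After giving some away he has 12 left. "
    "The number given away is 20 minus 12, which is 8. #### 8",
)
cod_shot = (QUESTION, "20 - x = 12; x = 8. #### 8")

new_question = "Mia had 15 apples and has 9 left. How many did she eat?"
cot_input = rough_tokens(few_shot_prompt([cot_shot] * 3, new_question))
cod_input = rough_tokens(few_shot_prompt([cod_shot] * 3, new_question))

# The draft-style prompt is shorter before the model has produced any output.
print(cot_input, cod_input)
```

Because the few-shot examples are sent with every request, the saving on the input side repeats on each call, in addition to the shorter output the model produces.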

However, compact prompts are not suitable for every task. Some scenarios require extended consideration, self-correction, or external knowledge retrieval. To address these limitations, researchers propose combining CoD with complementary approaches such as adaptive parallel reasoning or multi-level validation. Additionally, these findings could inform future AI model training by incorporating compact reasoning processes into training datasets.

The Chain of Draft method comes from Zoom Communications' research team, which has offered an "AI Companion" for meeting assistance since 2023. While response latency has often been overlooked in AI applications, CoD could prove especially valuable for real-time situations like video calls.
