Researchers present Janus, a novel AI model that achieves top performance in both understanding and generating images.

A team of researchers has developed Janus, an innovative AI model that combines multimodal understanding and visual generation in a single system. According to the developers, Janus is characterized by its flexibility and performance, which are based on a novel approach to processing visual information.

The main feature of Janus is the decoupling of visual coding for comprehension and generation tasks. The architecture of Janus is based on an autoregressive transformer model. However, unlike comparable models, Janus uses separate encoders for different input types such as text, images for comprehension and images for generation. These encoders convert the raw data into features, which are then processed by the transformer.

According to the researchers, Janus achieves top results in several benchmarks for multimodal understanding and visual generation compared to models of the same size. In multimodal understanding tasks, Janus even outperforms some task-specific models with significantly more parameters, with only 1.3 billion parameters.

Ad

THE DECODER Newsletter

The most important AI news straight to your inbox.

✓ Weekly

✓ Free

✓ Cancel at any time

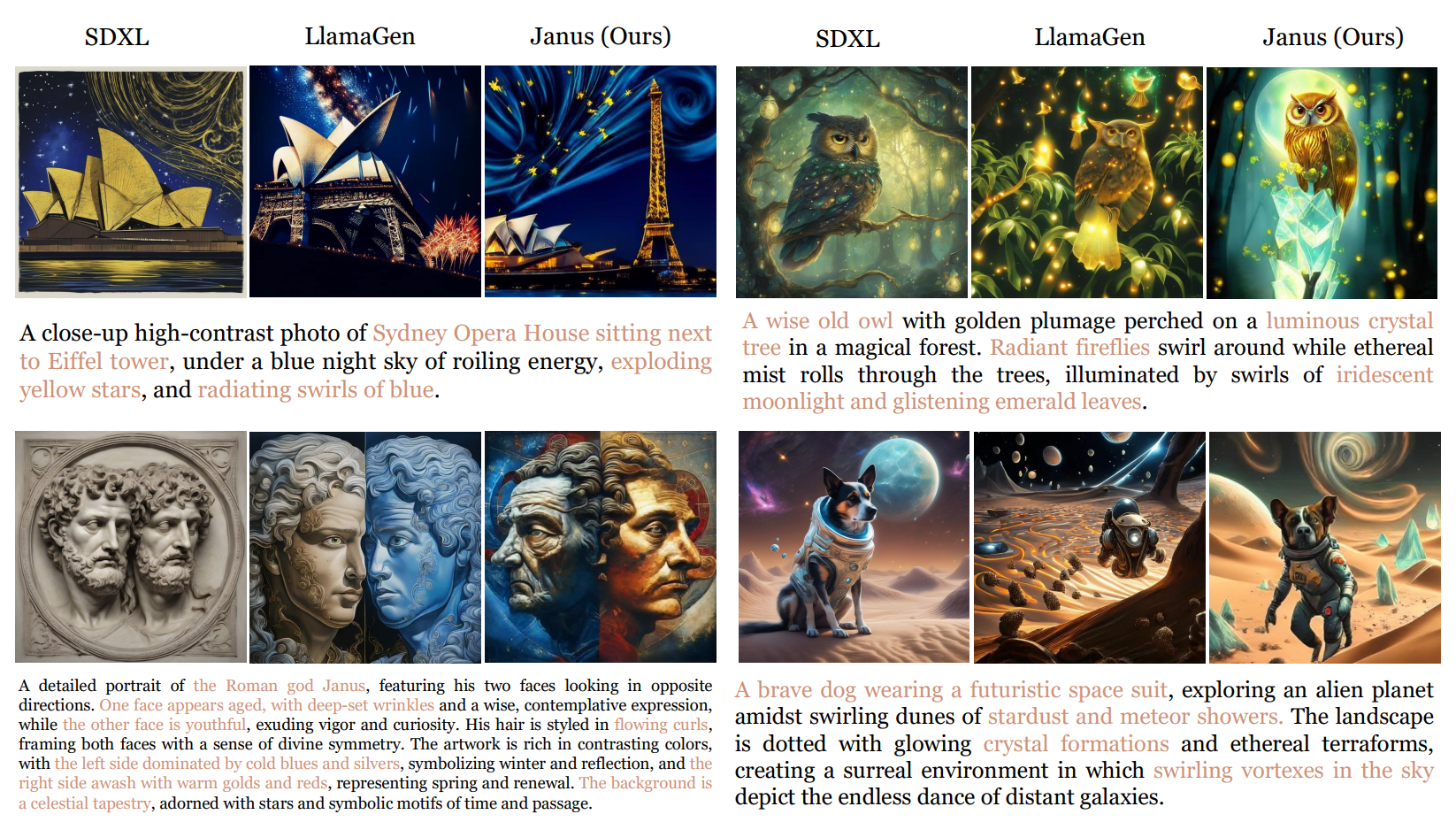

AI-generated images by SDXL, LlamaGen, and Janus, depicting landmarks and animals in various styles and interpretations. | Image: Wu et al.

AI-generated images by SDXL, LlamaGen, and Janus, depicting landmarks and animals in various styles and interpretations. | Image: Wu et al.Janus also shows strong performance in visual generation and outperforms some well-known models such as DALL-E 2. Although the results are far behind current top models such as FLUX in terms of quality, the model is significantly smaller and further scaling should enable better results.

Flexibility as a key feature

According to the developers, a particular advantage of Janus is its flexibility and ease of expansion. By decoupling the visual coding, the most suitable encoders can be selected for comprehension and generation tasks without having to make compromises.

The model can also be easily expanded to include additional modalities such as 3D point clouds, tactile data or EEG signals. This gives Janus the potential to become an even more powerful multimodal generalist model, the researchers explain.

According to the developers, the combination of strong performance, high flexibility and expandability makes Janus a promising candidate for the next generation of unified multimodal models.

More information and the model can be found on GitHub.

Recommendation

6 months ago

48

6 months ago

48